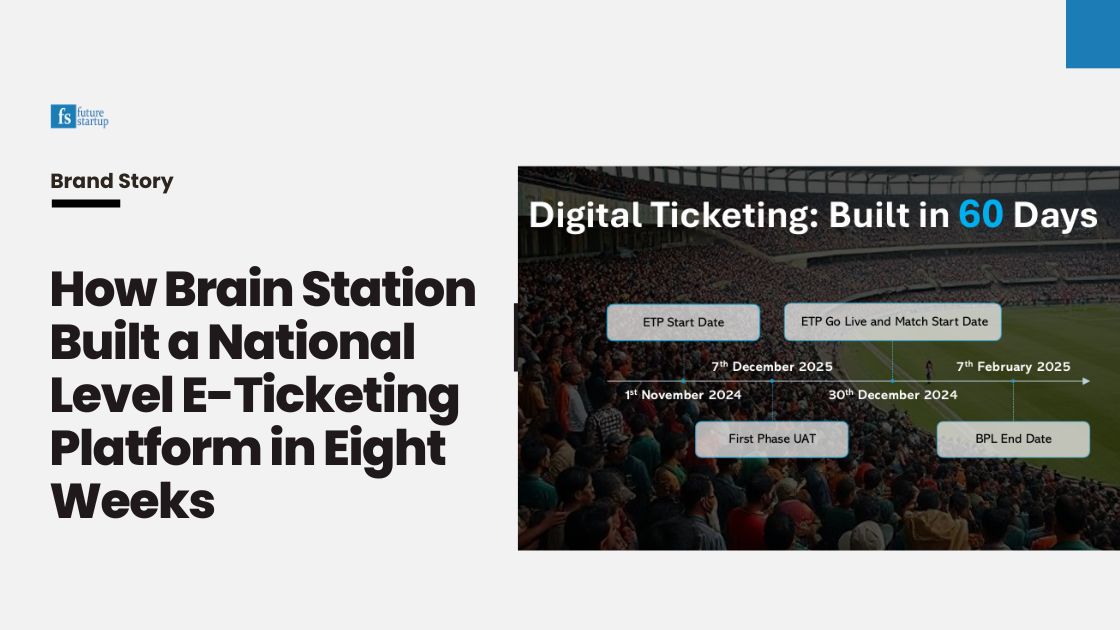

Imagine being handed a project with a ticking clock: eight weeks to build an e-ticketing platform for the Bangladesh Premier League (BPL) 2025, a 40-day, 24-event, and 46-match cricket extravaganza drawing millions of fans.

The system needs to handle an average of 25,000 tickets per match, validate them at chaotic stadium gates across three venues (Dhaka, Chittagong, and Sylhet) throughout the tournament, and stop black-market sales, all with spotty internet and only a rough idea of what to build.

Most companies would balk. Multiple vendors had already declined. Brain Station 23, Bangladesh’s home-grown global software firm, said, “Let’s do it.”

As Raisul Islam, who leads a 190-person business unit at Brain Station 23, puts it, “No one else was willing to take it on. We thought, ‘Inshallah, we can do this.’” His reasoning mixed professional calculation with national pride.

This is the story of how the Brain Station 23 team pulled it off, told step by step, with lessons that resonate far beyond tech. It’s about solving messy problems under pressure, leveraging experience, and delivering value when it matters most.

In late October, Relief Validation Limited (RVL) approached Brain Station with a project. The BPL T20 2025 tournament, starting December 30th, 2024, needed an online ticketing platform to sell and validate tickets for 46 matches across three venues in Dhaka, Sylhet, and Chittagong.

The goal? Sell tickets online and prevent black-market ticket sales using QR codes tied to RVL’s government-authorized digital signature system.

The catch? There were less than eight weeks to deliver, and the requirements were still tentative. “They reached out to us towards the end of October with just an idea,” Islam recalls. “Sell tickets online, validate with QR codes. That was it.”

Brain Station’s first meeting with RVL took place on October 25th. It gave a sense of the scale of the challenge: an average of 25,000 tickets per match, one or two matches daily, and peak demand that would test any system.

Brain Station, with a track record of building high-traffic systems, saw an opportunity in the challenge. The company had built hotel, concert, and sports booking systems for European partners that served thousands of simultaneous users. “We’d done ticketing and booking platforms before,” Islam says. The team understood the specific challenges that arise when thousands of users compete at once for limited inventory, and it had already solved the related caching problems of tracking seat availability in real time across multiple channels.

"We worked on similar high-traffic booking systems in Europe where we had used .NET," Islam explains. "We had the advantage of having our booking engine ready.”

However, this wasn't about code reuse—though that helped. It was about having seen this movie before. The European projects had taught the Brain Station team which technical approaches worked under extreme load and which failed. More importantly, they had learned to distinguish between problems that looked impossible but weren't, and problems that actually were impossible.

The confidence to take on a challenging project came from this accumulated knowledge. As Islam puts it: "Working on large systems and with big giants in Europe gave us confidence and morale that we could do this kind of work."

On November 1st, the company started work on the strength of a verbal agreement. The timeline was so tight that it couldn’t wait for the formal contract, which came two weeks later.

Within two days, Brain Station rallied a 21-person team from its 190-person business unit. This rapid mobilization revealed an organizational capability that smaller vendors couldn't match: enough bench strength to form specialized teams quickly without disrupting ongoing work. “We had the scale to move fast,” Islam says.

The team structure reflected the project's complexity: backend developers for the core platform, frontend developers for the user portal, two mobile app teams (one for ticket buyers, one for gate checkers), and a QA team for testing.

Sub-teams formed with clear roles: backend handled architecture, mobile teams tackled apps, and QA planned load tests. “We set up daily stand-ups and used tools like Jira to stay aligned,” Islam explains.

But assembling the team was the easy part. The harder challenge was figuring out what to build.

The initial idea the team received was a broad concept: sell BPL tickets online and validate them with QR codes. The first challenge was turning that loose concept into a plan. This ambiguity could have been paralyzing; instead, the Brain Station 23 team treated it as an opportunity to shape the solution. Rather than waiting for a perfect spec, they worked with the RVL team on a concrete plan, asking, “What’s the real problem?”

Drawing on its European experience, the company proposed a comprehensive platform rather than a single-use application. Stadiums would be dynamically configurable—galleries could be added or removed, capacities could change, and new venues could be integrated without code changes.

"From the beginning, we considered this as a platform and made it configurable so that in the future, they could configure it as they wished," Islam explains. “A stadium might have five galleries one day, four the next. We made it flexible so organizers could add venues like Sylhet or Chittagong themselves.”

This architectural decision, building for flexibility rather than just meeting the immediate need, would prove crucial. RVL continues to use the same platform for all subsequent matches with minimal changes. It also reflected a deeper philosophical approach: the Brain Station 23 team wasn't just executing requirements; they were partnering to solve the underlying business problem. “We built it for BPL’s 46 matches but made it evergreen for future events,” Islam says.
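To make the idea of a configurable venue concrete, here is a minimal Python sketch. The production platform was built on .NET, and the class and field names below are hypothetical, not taken from it. Galleries live in data rather than code, so adding a gallery, removing one, or onboarding a new stadium is a data change:

```python
from dataclasses import dataclass, field


@dataclass
class Gallery:
    name: str
    capacity: int


@dataclass
class Venue:
    """A stadium whose layout is data, not code (hypothetical model)."""
    name: str
    galleries: dict[str, Gallery] = field(default_factory=dict)

    def add_gallery(self, name: str, capacity: int) -> None:
        self.galleries[name] = Gallery(name, capacity)

    def remove_gallery(self, name: str) -> None:
        self.galleries.pop(name, None)  # removing an unknown gallery is a no-op

    def total_capacity(self) -> int:
        return sum(g.capacity for g in self.galleries.values())


def venue_from_config(config: dict) -> Venue:
    """Build a venue from plain configuration data."""
    venue = Venue(config["name"])
    for gallery in config["galleries"]:
        venue.add_gallery(gallery["name"], gallery["capacity"])
    return venue


# Example venue; the gallery names and capacities are made up.
sylhet = venue_from_config({
    "name": "Sylhet",
    "galleries": [
        {"name": "North", "capacity": 5000},
        {"name": "South", "capacity": 4000},
    ],
})
sylhet.remove_gallery("South")   # a layout change without touching code
sylhet.add_gallery("East", 3000)
print(sylhet.total_capacity())   # 8000
```

In practice the configuration would come from an admin screen or a database rather than a literal dictionary.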

Daily calls with RVL became exercises in collaborative design. "We'd hold daily calls," Islam explains. "They'd say, 'We need this,' and we'd ask, 'Why? What's the user impact?'" The constant collaboration shaped features like dynamic seat mapping and QR code integration, ensuring the platform solved the core issues—black-market tickets—while adding value like flexibility.

By November 3rd, the team had a feature specification that was both comprehensive and focused: event creation, ticket sales, payment processing, and validation, all configurable.

Rather than asking for detailed specifications upfront, the Brain Station team used the ambiguity as an opportunity to become true partners in solution design. This approach requires deep domain knowledge. You need to understand the business problem well enough to propose valuable features. When it works, it creates much stronger client relationships than simple build-to-spec arrangements.

Development began on November 4th. The team chose a microservices architecture with .NET for the backend—built on their proven European booking engine rather than starting from scratch—and Angular for the frontend. “Backend built the core—event and seat configs—while frontend made the user portal,” Islam says.

This decision encapsulated everything Brain Station 23 had learned about balancing speed with reliability. The booking engine had been battle-tested at similar volumes, and the team had already solved the complex caching and queue-management problems that arise when thousands compete for limited inventory. More importantly, the team had already encountered various failure modes and fixed them.

"Our booking engine, built for European systems, was already tested for high traffic," Islam explains. "It handled caching and real-time syncing well."

The architecture split functionality across independent services: event management, ticket sales, payment processing, and validation. Each service could scale independently, fail independently, and be deployed independently—crucial when you're iterating rapidly under time pressure.

But adapting the booking engine proved more complex than simple reuse. The European systems assumed reliable internet connectivity and sophisticated payment infrastructure. Bangladesh's reality required different assumptions. Mobile networks collapse under crowd pressure. Payment gateways fail at scale. Users include people buying their first digital ticket alongside tech-savvy urban fans.

UI design reflected these constraints. "We made it intuitive for all kinds of users," Islam notes. Simple flows, clear visual cues, minimal text, and forgiving error handling became design principles. The buyer app prioritized simplicity: users selected matches, galleries, and seats, paid via gateways, and got QR-coded tickets.

Payment integration revealed another layer of complexity. “Payments were tricky,” Islam recalls. Multiple gateways were required for redundancy, each with different scaling characteristics. “Gateways couldn't always scale, so we added 10-minute retry windows,” he says.
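One reading of the 10-minute retry window is that a buyer's seat stays reserved while the payment is retried, and is released if the window closes without a successful charge. A minimal sketch under that assumption; `charge` stands in for a hypothetical gateway call, and the backoff values are illustrative:

```python
import time
from typing import Callable

HOLD_WINDOW_SECONDS = 10 * 60  # the 10-minute window mentioned in the article


def pay_within_window(
    charge: Callable[[], bool],
    now: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
    window: float = HOLD_WINDOW_SECONDS,
    first_delay: float = 5.0,
    max_delay: float = 60.0,
) -> bool:
    """Retry a payment until it succeeds or the seat-hold window closes.

    `charge` is a hypothetical gateway call that returns True on success.
    Returns False when the window expires, so the caller can release the seat.
    """
    deadline = now() + window
    delay = first_delay
    while True:
        if charge():
            return True
        remaining = deadline - now()
        if remaining <= 0:
            return False
        sleep(min(delay, remaining))       # never sleep past the deadline
        delay = min(delay * 2, max_delay)  # capped exponential backoff
```

Injecting `now` and `sleep` keeps the retry loop testable without waiting ten real minutes.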

The team worked in daily sprints: developers pushed code to Git, reviewed it through pull requests, and demoed progress to RVL every day. For peak loads, they implemented queues and caching. “Thousands requesting the same seat risks overselling,” Islam explains. “We cached capacity data, queued requests, and synced databases in the background.”
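The queue-and-cache pattern Islam describes can be sketched as a single consumer that owns a cached capacity count per gallery and processes purchase requests in arrival order; accepted requests are written to the database afterwards. This is an in-process illustration with hypothetical names; a production system would use a shared cache and a durable message queue:

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class SeatRequest:
    request_id: str
    gallery: str
    quantity: int


class GalleryInventory:
    """Cached remaining capacity, changed only by one queue consumer.

    Requests are applied strictly in arrival order, so two buyers can never
    both be granted the last seat (hypothetical, in-process illustration).
    """

    def __init__(self, capacities: dict[str, int]) -> None:
        self.remaining = dict(capacities)            # in-memory capacity cache
        self.queue: deque[SeatRequest] = deque()
        self.pending_writes: list[SeatRequest] = []  # persisted in the background

    def submit(self, request: SeatRequest) -> None:
        self.queue.append(request)

    def process(self) -> dict[str, bool]:
        """Drain the queue; return request_id -> accepted?"""
        results = {}
        while self.queue:
            req = self.queue.popleft()
            left = self.remaining.get(req.gallery, 0)
            accepted = req.quantity <= left
            if accepted:
                self.remaining[req.gallery] = left - req.quantity
                self.pending_writes.append(req)
            results[req.request_id] = accepted
        return results

    def sync_to_database(self, write) -> int:
        """Background step: hand accepted requests to a (hypothetical) DB writer."""
        written = 0
        while self.pending_writes:
            write(self.pending_writes.pop(0))
            written += 1
        return written
```

Because buyers only ever read and update the cache through the queue, the database can lag slightly behind without risking an oversold gallery.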

The QR code system integrated RVL’s digital signatures, so each ticket carried a unique, verifiable code. “We tested integration early, catching bugs like failed signature verifications,” Islam says.

By November 10th, a working prototype was ready with core features like seat selection and payment flows functional.

Ticket validation presented a unique challenge. Infrastructure limitations made real-time validation against central servers impractical.

The first validation system was built on ideal assumptions—scan the QR code, check the ticket against the central database, verify it hasn't been used, then mark it as consumed. Under controlled testing conditions, this approach worked perfectly. Under stadium conditions, each validation took 30-60 seconds.

Each match drew 25,000 fans through three to four gates, with each gate handling 5,000-6,000 people.

The math was terrifying. With four gates serving 25,000 fans, and each gate processing tickets at one-minute intervals, it would take over six hours to admit everyone. “You can't have delays,” Islam realized. “A minute per scan means chaos.”
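The arithmetic behind the six-hour figure depends on how many scanners work in parallel at each gate, a number the article does not give. The helper below makes that an explicit, assumed parameter:

```python
def hours_to_admit(fans: int, gates: int, scanners_per_gate: int, seconds_per_scan: float) -> float:
    """Hours to admit everyone when all scanners work in parallel at a fixed pace.

    `scanners_per_gate` is an assumption; the article does not state it.
    """
    scans_per_scanner = fans / (gates * scanners_per_gate)
    return scans_per_scanner * seconds_per_scan / 3600


print(round(hours_to_admit(25_000, 4, 1, 60), 1))   # 104.2
print(round(hours_to_admit(25_000, 4, 17, 60), 1))  # 6.1
print(round(hours_to_admit(25_000, 4, 1, 0.5), 1))  # 0.9
```

With a single scanner per gate, one-minute scans would take more than 100 hours, so the "over six hours" estimate implies roughly 17 scanners per gate. At half a second per scan, even one scanner per gate clears its share in under an hour.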

The solution required rethinking fundamental assumptions about how validation should work. Instead of real-time database queries, the team developed a hybrid offline-online system.

Critical validation data—ticket authenticity, match details, usage status—was cached locally on validation devices. “We stored data locally for fast scans,” Islam explains. The validation logic could run entirely offline, checking cryptographic signatures and local databases in fractions of a second.
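A minimal sketch of the offline path, using an in-memory SQLite database as the device-local cache. The article mentions SQLite, but the schema and function names here are hypothetical. It assumes the QR signature has already been verified and decides entry from local data alone:

```python
import sqlite3
import time


def open_device_cache(ticket_ids: list[str]) -> sqlite3.Connection:
    """Preload the match's ticket IDs onto the gate device before doors open."""
    conn = sqlite3.connect(":memory:")  # a file on a real device
    conn.execute("CREATE TABLE tickets (id TEXT PRIMARY KEY, used_at REAL)")
    conn.executemany("INSERT INTO tickets (id) VALUES (?)", [(t,) for t in ticket_ids])
    conn.commit()
    return conn


def validate_offline(conn: sqlite3.Connection, ticket_id: str,
                     ticket_match: str, gate_match: str) -> str:
    """Decide entry from local data only; assumes the QR signature was verified."""
    if ticket_match != gate_match:
        return "WRONG_MATCH"
    row = conn.execute("SELECT used_at FROM tickets WHERE id = ?", (ticket_id,)).fetchone()
    if row is None:
        return "UNKNOWN_TICKET"
    if row[0] is not None:
        return "ALREADY_USED"  # only reuse this device already knows about
    conn.execute("UPDATE tickets SET used_at = ? WHERE id = ?", (time.time(), ticket_id))
    conn.commit()
    return "ADMIT"
```

Every decision is a primary-key lookup on the device, which is why it can finish in a fraction of a second regardless of network conditions.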

But offline validation created a new problem: duplicate prevention. If each device operated independently, how could they prevent the same ticket from being accepted at multiple gates? “Offline devices don’t know if a ticket’s used elsewhere,” Islam says.

The answer was background synchronization: devices would exchange usage updates whenever network connectivity allowed, keeping the whole validation system eventually consistent. The team added background syncing every minute through API calls. In simulated tests, duplicates persisted at around 2-3% of scans. “We’d see errors like ‘ticket already used’ failing to flag,” Islam says.

The engineering was elegant in theory. Implementation revealed layers of complexity. How often should devices sync? What happens when sync fails? How do you resolve conflicts when the same ticket appears to be used at multiple locations simultaneously?
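The article leaves the sync protocol unspecified, so the sketch below picks one plausible policy: devices periodically push their unsynced scans to a central service, the earliest scan of each ticket is treated as the valid entry, and any later scan is flagged as a duplicate. The service returns every used ticket ID so devices can update their local caches. Names are hypothetical, and the earliest-wins rule assumes device clocks are reasonably synchronized:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scan:
    ticket_id: str
    device_id: str
    scanned_at: float  # device timestamp in seconds


class SyncServer:
    """Hypothetical central service that every gate device calls periodically."""

    def __init__(self) -> None:
        self.first_scan: dict[str, Scan] = {}  # ticket_id -> accepted entry
        self.duplicates: list[Scan] = []       # scans flagged as reuse
        self.seen: set[Scan] = set()           # makes re-sent batches harmless

    def push(self, scans: list[Scan]) -> set[str]:
        """Merge a device's unsynced scans; return every ticket ID known to be used."""
        for scan in sorted(scans, key=lambda s: s.scanned_at):
            if scan in self.seen:
                continue  # retry of a batch that was already merged
            self.seen.add(scan)
            current = self.first_scan.get(scan.ticket_id)
            if current is None:
                self.first_scan[scan.ticket_id] = scan
            elif scan.scanned_at < current.scanned_at:
                # An earlier scan arrived late: it wins; the stored one is the duplicate.
                self.duplicates.append(current)
                self.first_scan[scan.ticket_id] = scan
            else:
                self.duplicates.append(scan)
        return set(self.first_scan)
```

Re-sending a batch after a failed sync is harmless because already-seen scans are skipped. Shortening the sync interval narrows the window in which an offline gate can still admit a copied ticket.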

"These numerous logical operations and calculations, checking them in real-time within a fraction of a second, were very challenging," Islam explains.

Developers worked late, tweaking the app’s SQLite database to cache ticket data. They iterated daily, tightening sync logic and adding conflict resolution.

By December 25th, the team had a stable validation app that could process tickets in sub-second timeframes while maintaining reasonable duplicate prevention. They knew real stadium conditions would test it in ways that controlled environments couldn't simulate.

Validation wasn’t solved all at once. Brain Station tackled speed first, then duplicates, with daily code pushes and tests. “We’d deploy a fix, test with 100 tickets, then scale,” Islam says.

Break big problems into iterative steps. The key is treating limitations as design challenges rather than obstacles.

Testing a system designed to handle 25,000 concurrent users presents obvious challenges. Brain Station 23's QA team focused on the scenarios they could simulate: race conditions during ticket purchases, payment gateway failures, and database performance under load.
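Race conditions during ticket purchases typically mean two buyers claiming the same seat. One standard fix is to fold the availability check and the update into a single conditional statement, so the database itself arbitrates. The article does not specify the locking mechanism the team used; this sketch shows the pattern with a hypothetical SQLite `seats` table:

```python
import sqlite3


def create_seat_table(seat_ids: list[str]) -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE seats (id TEXT PRIMARY KEY, status TEXT NOT NULL, order_id TEXT)"
    )
    conn.executemany(
        "INSERT INTO seats (id, status) VALUES (?, 'available')",
        [(s,) for s in seat_ids],
    )
    conn.commit()
    return conn


def try_sell_seat(conn: sqlite3.Connection, seat_id: str, order_id: str) -> bool:
    """Claim a seat only if it is still available.

    Check and update happen in one statement, so if two buyers race for the
    same seat, the second UPDATE matches zero rows instead of overwriting.
    """
    cur = conn.execute(
        "UPDATE seats SET status = 'sold', order_id = ? "
        "WHERE id = ? AND status = 'available'",
        (order_id, seat_id),
    )
    conn.commit()
    return cur.rowcount == 1
```

A separate "read, then write" sequence leaves a gap in which another request can slip in; the conditional `UPDATE` closes that gap without an explicit lock.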

"We caught race conditions—the same seat sold twice," Islam recalls. The fixes involved stronger database locking mechanisms and more sophisticated queue management. But each fix created new potential failure points, requiring additional testing cycles.

The validation system presented even greater testing challenges. The team ran load tests, but how do you simulate the chaos of 25,000 fans arriving simultaneously in a test environment? The team focused on the logic they could verify: cryptographic signature validation, duplicate detection algorithms, and sync conflict resolution. But perhaps the most important testing insight was recognizing the limits of pre-deployment validation.

"We did the automated load testing. However, the context in automated load testing under a controlled environment vs the real loads in a public environment where we have very limited control is totally different," Islam acknowledges. This recognition shaped their approach to launch. Rather than expecting perfection from day one, they built systems for rapid iteration and real-time fixes.

Device standardization emerged as another crucial issue during testing. Initially, the plan was to use ticket checkers' personal phones for validation. Testing showed the flaw in this approach. Devices came with varied processing power and memory. "Some had 2GB RAM, slowing after an hour," Islam notes. Lower-end devices would crash during extended validation sessions, creating bottlenecks at gates.

The solution was to dedicate standardized devices to validation, an operational change that required coordination with RVL but dramatically improved system reliability.

User Acceptance Testing began December 7th, with RVL staff testing the complete flow from ticket purchase to gate validation. The feedback revealed interface issues that lab testing had missed: seat maps loaded too slowly, payment confirmation was unclear, and the validation app's feedback to checkers was ambiguous.

These fixes happened in real time. “We'd code, test, and demo daily to RVL,” Islam explains. Bugs such as payment failures, which affected 20-30% of payment attempts, led to tweaks in the retry logic.

The team worked 12-14 hours, seven days a week, driven by ownership. “People felt this was for the country,” Islam says. “They’d say, ‘We’ll work weekends to finish.’”

Brain Station's testing approach acknowledged the fundamental limitation: no amount of lab testing could fully simulate stadium conditions. Instead of pursuing perfect pre-deployment testing, the team focused on building systems that could be monitored, diagnosed, and fixed in real-time.

Sometimes, the best preparation for unpredictable conditions is building adaptable systems rather than trying to predict every scenario.

Ticket sales went live December 23rd-24th. The system handled the initial load well. But the real test came on December 30th when matches began and validation systems faced their first stadium crowd. The results were humbling. "First match: 500-1,000 duplicates," Islam recalls. "People photocopied PDFs, sneaking through gates."

This represented a 2-4% failure rate: technically impressive for a system built in eight weeks, but problematic for a security-critical application designed to prevent black-market ticket sales. The issue wasn't a technical failure but human creativity the team hadn't fully anticipated. Fans discovered they could print copies of PDF tickets and try different gates, gambling that the validation system wouldn't catch them.

The duplicate problem also revealed deeper issues. The validation system was working correctly, but not always reliably. It was detecting duplicate attempts and rejecting them. However, the rejection process was confusing for both fans and gate checkers. Legitimate ticket holders sometimes found their tickets rejected due to sync delays or database conflicts, creating customer service nightmares at stadium entrances.

The team monitored these problems in real time. “We'd call checkers mid-match: ‘What's happening at gate 3?’” Islam explains. A dashboard showed scan times, duplicate rates, and throughput at each gate. When problems emerged, the team could quickly determine whether they were technical, operational, or behavioral.
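The dashboard metrics mentioned here (scan times, duplicate rates, and throughput per gate) can be computed from a stream of scan events. A minimal sketch with a hypothetical event shape and a sliding time window:

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass
class ScanEvent:
    gate: str
    result: str         # e.g. "ADMIT" or "ALREADY_USED"
    duration_ms: float  # time from scan to on-screen decision
    at: float           # seconds since gates opened


def gate_summary(events: list[ScanEvent], now: float,
                 window_seconds: float = 300.0) -> dict[str, dict]:
    """Per-gate scan count, median scan time, duplicate rate, and scans per
    minute over the last `window_seconds`."""
    by_gate: dict[str, list[ScanEvent]] = defaultdict(list)
    for e in events:
        if now - window_seconds <= e.at <= now:
            by_gate[e.gate].append(e)
    summary = {}
    for gate, evs in by_gate.items():
        dupes = sum(1 for e in evs if e.result == "ALREADY_USED")
        summary[gate] = {
            "scans": len(evs),
            "median_scan_ms": median([e.duration_ms for e in evs]),
            "duplicate_rate": dupes / len(evs),
            "per_minute": len(evs) / (window_seconds / 60),
        }
    return summary
```

A rising duplicate rate with normal scan times points to behavior (copied tickets), while slow scans at one gate point to a device or connectivity problem.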

The checker adaptation curve proved steeper than expected. "It took three matches for them to adapt," Islam notes. The validation process was entirely new—checkers had to learn not just how to use the app, but how to handle edge cases, explain problems to fans, and maintain crowd flow under pressure.

Meanwhile, the technical team was pushing fixes nightly. “Day one, we saw sync delays,” Islam says. They cut sync intervals to 30 seconds, deploying updates overnight. Conflict resolution logic was tightened. Error messages were clarified. Device-specific optimizations were deployed to prevent crashes during extended use.

The improvement was systematic and measurable. “By match two, duplicates fell to 200-300. By match three, tighter logic and device upgrades dropped duplicates to under 100; by match four, single digits,” Islam says. “We hit near-zero by tweaking sync conflict handling.”

The success didn’t come from a perfect launch execution, but from building systems that could be monitored, diagnosed, and improved rapidly during live operations.

The ability to iterate quickly under pressure became more valuable than getting everything right the first time.

On December 15th, while the validation system was still being stabilized, RVL requested a change: the option to issue physical tickets for distribution to some partners' customers.

"Our system was online-only," Islam explains. The entire architecture assumed digital-first sales with validation tied to online purchase records. Converting to a mixed online-offline model required rethinking core assumptions about ticket generation, distribution, and validation.

The Brain Station 23 team took one week to implement a solution. They worked through the night developing a system that could generate downloadable, printable tickets while maintaining integration with the validation system. QR codes had to work identically whether purchased online or printed offline. The validation app had to handle both ticket types seamlessly.

"Code was pushed at 3 AM, tested by dawn," Islam recalls. The technical solution worked. The Brain Station 23 team had solved the technological challenge of mixed online-offline ticketing in a week.

While the project was a success, the offline ticket challenge showed the importance of understanding and planning for the entire operational ecosystem and maintaining development flexibility so that you can maneuver when needed.

BPL 2025 concluded with measurable success: nearly 13 crore taka in revenue compared to its historical 4-6 crore range. User app ratings averaged 4.2-4.5 stars despite the complexity of introducing digital ticketing to diverse audiences. The validation system, after its rocky start, achieved near-zero duplicate rates while maintaining sub-second processing times.

But the more significant success was architectural: the organizers continued using the e-ticketing platform (ETP) for subsequent matches, including the Bangladesh vs. Pakistan, Bangladesh vs. Netherlands, and Bangladesh vs. New Zealand series, without requiring major modifications. The early decision to build a configurable platform rather than a single-use application paid dividends.

Post-tournament analysis revealed both strengths and weaknesses in Brain Station 23's approach. The rapid iteration capability that enabled real-time fixes during matches was crucial for success. The team's willingness to work unsustainable hours during the crisis period enabled delivery that wouldn't have been possible otherwise. But the same intensity that made crisis delivery possible wasn't sustainable for routine operations.

The team's sense that it was working for the country was genuine and effective, but it raised questions about whether the development model could be replicated for purely commercial projects lacking the same emotional resonance.

The technical platform continued evolving after launch. A January update added analytics for gate throughput, helping optimize staffing for future events. Payment gateway integration was refined based on transaction failure patterns observed during live operation. The validation system received performance optimizations that reduced scan times by an additional 20%.

Brain Station 23 and RVL began planning to scale the platform nationally. "We're eyeing more events, like concerts," Islam notes. The success with BPL 2025 provided proof of concept for broader market deployment.

The story of how Brain Station 23 built the BPL ETP offers insights that extend beyond software development to any situation that requires building complex systems under extreme pressure with incomplete information.

Pattern recognition creates a competitive advantage: Brain Station’s European experience provided both technical foundations and the confidence to take on challenges that others rejected. This wasn't about superior skills but about having seen similar problems before and knowing which solutions worked under pressure.

Partnership and collaboration beat specification: Rather than demanding detailed requirements upfront, Brain Station 23 used ambiguity as an opportunity to become true partners in solution design. This approach requires domain expertise but creates much stronger client relationships than build-to-spec arrangements.

Constraints drive innovation: The unreliable internet connectivity that seemed like a limitation forced the development of more robust systems than might have emerged otherwise. The most elegant solutions often emerge from the tightest constraints.

Flexibility and iteration are everything: No amount of planning could have anticipated exactly how fans would game the system or how quickly checkers would adapt to new processes. Success came from building systems that could be monitored and improved rapidly during live operations.

The project also showed the costs of crisis-driven delivery. The team's voluntary commitment to 12-14-hour days for two months enabled success but wasn't sustainable for routine operations.

For Brain Station 23, the project validated its positioning as a company capable of competing globally while solving critical local challenges. "We proved we can build world-class systems here," Islam reflects.

The platform continues operating today, handling the Bangladesh Cricket Board's (BCB) ticketing needs and serving as a proof of concept for broader national deployment. In eight weeks, the Brain Station 23 team built more than software: they demonstrated a model for rapid delivery under extreme pressure while building sustainable foundations for future growth.

As Islam puts it: "When you see your work impact a national event, it hits differently." The technical achievement was impressive, but the lasting impact was showing what becomes possible when experience, preparation, and determination converge under pressure.